- Sun 14 May 2017

- Publication

- Xue Bin Peng, Glen Berseth, KangKang Yin, Michiel van de Panne

- #News, #RL, #Locomotion

Abstract

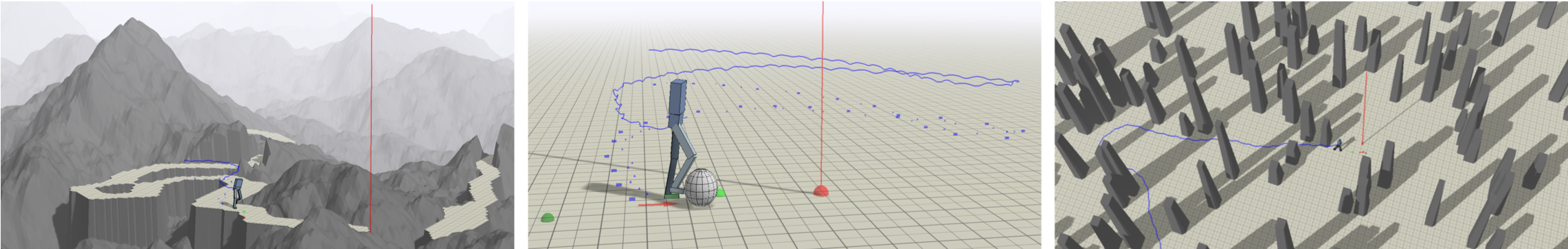

Learning physics-based locomotion skills is a difficult problem, leading to solutions that typically exploit prior knowledge of various forms. In this paper, we aim to learn a variety of environment-aware locomotion skills with a limited amount of prior knowledge. We adopt a two-level hierarchical control framework. First, low-level controllers are learned that operate at a fine timescale and which achieve robust walking gaits that satisfy stepping-target and style objectives. Second, high-level controllers are then learned which plan at the timescale of steps by invoking desired step targets for the low-level controller. The high-level controller makes decisions directly based on high-dimensional inputs, including terrain maps or other suitable representations of the surroundings. Both levels of the control policy are trained using deep reinforcement learning. Results are demonstrated on a simulated 3D biped. Low-level controllers are learned for a variety of motion styles and demonstrate robustness with respect to force-based disturbances, terrain variations, and style interpolation. High-level controllers are demonstrated that are capable of following trails through terrains, dribbling a soccer ball towards a target location, and navigating through static or dynamic obstacles.

Files

Paper Paper supplimentary material

Videos!

Bibtex

@article{2017-TOG-deepLoco,

title={DeepLoco: Dynamic Locomotion Skills Using Hierarchical Deep Reinforcement Learning},

author={Xue Bin Peng and Glen Berseth and KangKang Yin and Michiel van de Panne},

journal = {ACM Transactions on Graphics (Proc. SIGGRAPH 2017)},

volume = 36,

number = 4,

article = 41,

year={2017}

}

Acknowledgements

We thank the anonymous reviewers for their helpful feedback. This research was funded in part by an NSERC Discovery Grant (RGPIN-2015-04843).